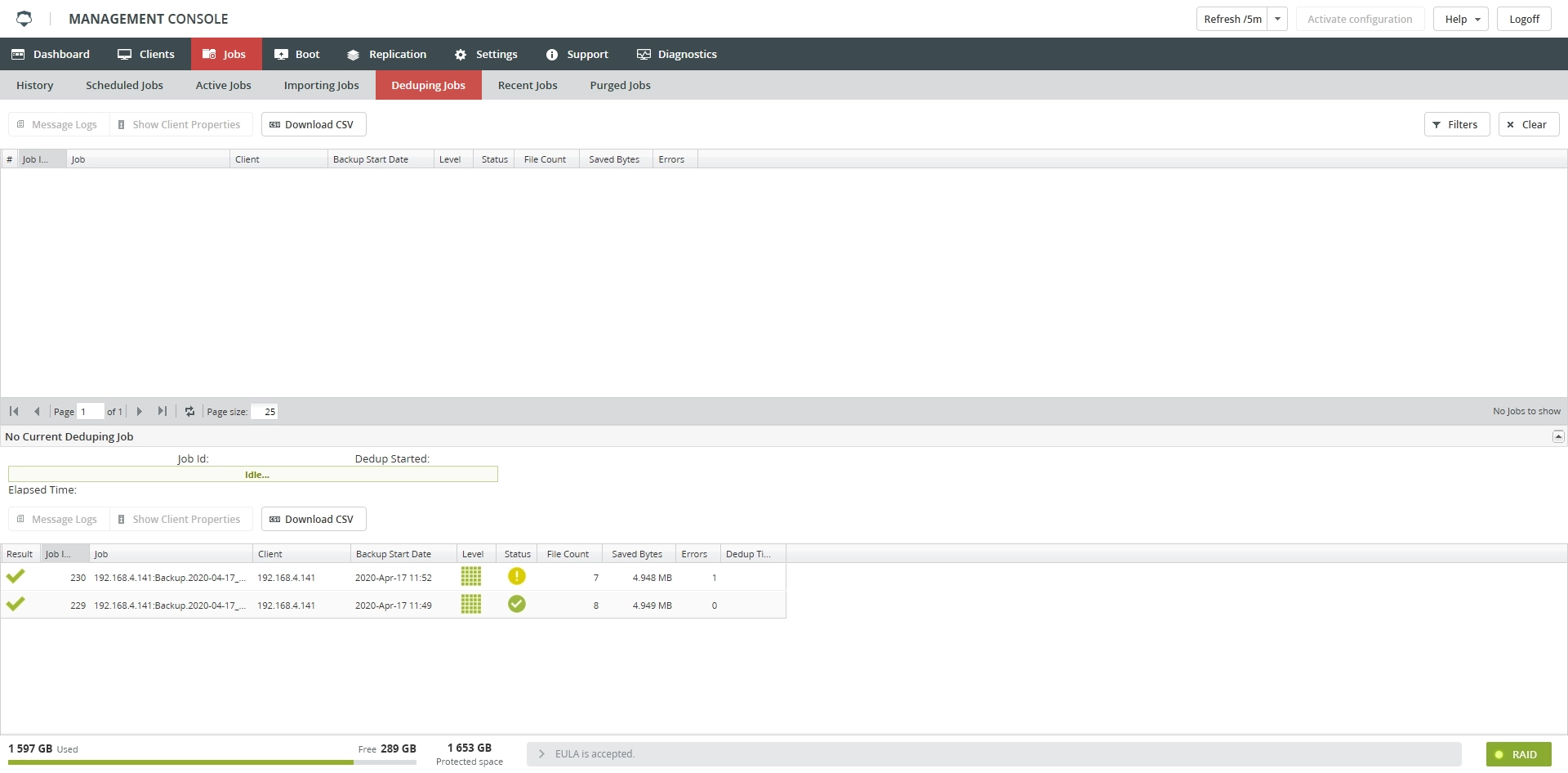

Deduping Jobs subtab

Overview

The Deduping Jobs subtab lists backup jobs that have been deduplicated, meaning that only one copy of a given file is kept in the backup.

The screen lists jobs that are pending, the job currently being deduplicated, and jobs that were most recently deduplicated.

Currently, Infrascale offers a post-process deduplication feature. This requires a backup job’s data to occupy space on your RAID while deduplication occurs. Deduplication occurs by reading the backup job data and copying unique files to a repository (that is also on the RAID) where duplicate files can be referenced rather than copied. During this time, both the original backup job data and repository copies of unique files are on the RAID. In the worst case, (if a backup job consists entirely of unique files), free space equal to the backup job’s size is required for the repository copies. Consequently, prior to running a backup job, which will be deduplicated, the amount of free space required to backup and deduplicate may be as much as double the size of the data to be backed up. When deduplication completes, the original backup job data is then deleted from the RAID.

Given these requirements, the system checks the following before deduplicating a backup job on the RAID:

There must be at least 1.5 GB free space on the RAID.

There must be more free space on the RAID than the size of the backup job.

For example, if a 100 GB backup job completes, the system requires at least 100 GB of free space to begin the deduplication process.

If your appliance is nearing its full capacity, you can try these suggestions to allow successful deduplication:

Stagger the full jobs of large clients.

The time between each job should account for its backup, importing, and deduplication time. For example, if you have 3 clients that each take 30 minutes to backup, import, and deduplicate; schedule the full backups at least 45–60 minutes apart. The length of time it takes to backup and deduplicate varies with the size and number of files.

If a single client full backup is too large and will not deduplicate, we recommend you break the client into two or more separate clients, each with a non-overlapping file set that has roughly a balanced amount of data.

For example, if you have a client SRV with 1 TB of data on

C:and 1 TB of data onD:, create a SRV-A client withC:/in its file set, and a SRV-B client withD:/in its file set. Stagger these clients’ schedules per the above recommendation. Separating the data in this manner will require a minimum of 2 TB of free space instead of 4 TB.

Information

Information on the Deduping Jobs subtab is presented in the table format with the following columns:

| Column | Values | Description |

|---|---|---|

| Result | Result of the job deduplication | |

| Job Id | Unique identifier of the job | |

| Job | Name of the job | |

| Client | Name of the client the job belongs to | |

| Backup Start Date | Date and time the backup started | |

| Import Start Date | Date and time the importing started | |

| Level | Backup level of the job | |

| Full backup | ||

| Differential backup | ||

| Incremental backup | ||

| Status | Status of the job | |

| Successfully Completed. The job completed successfully. | ||

| Completed with errors. The job completed, but might have some problems. | ||

| Error terminated. The job did not complete because of the errors. | ||

| File Count | Number of the backed up files in the job | |

| Saved Bytes | Number of bytes saved to appliance | |

| Errors | Number of errors in the job | |

| Dedup Time | Time the deduplication took |

Actions

All actions on the Deduping Jobs subtab are available

on the toolbar

in the job context menu

| Action | Description |

|---|---|

| Message Logs | View the detailed log of the job progress |

| Show Client Properties | View details about the client the job belongs to |

| Download CSV | Save data shown in the table locally in a CSV file |

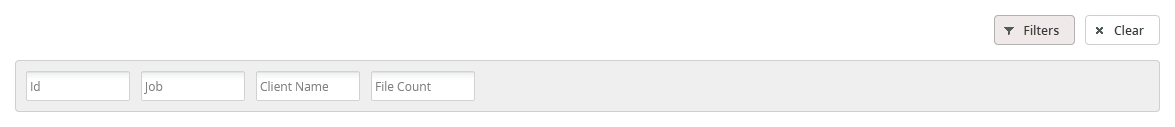

Filtering

You can refine the list of jobs using filters. Filtering is available only for jobs in progress, but not for the completed.

To show all filters available on the Deduping Jobs subtab, click Filters on the toolbar.